Search Strategies for LLM Inference

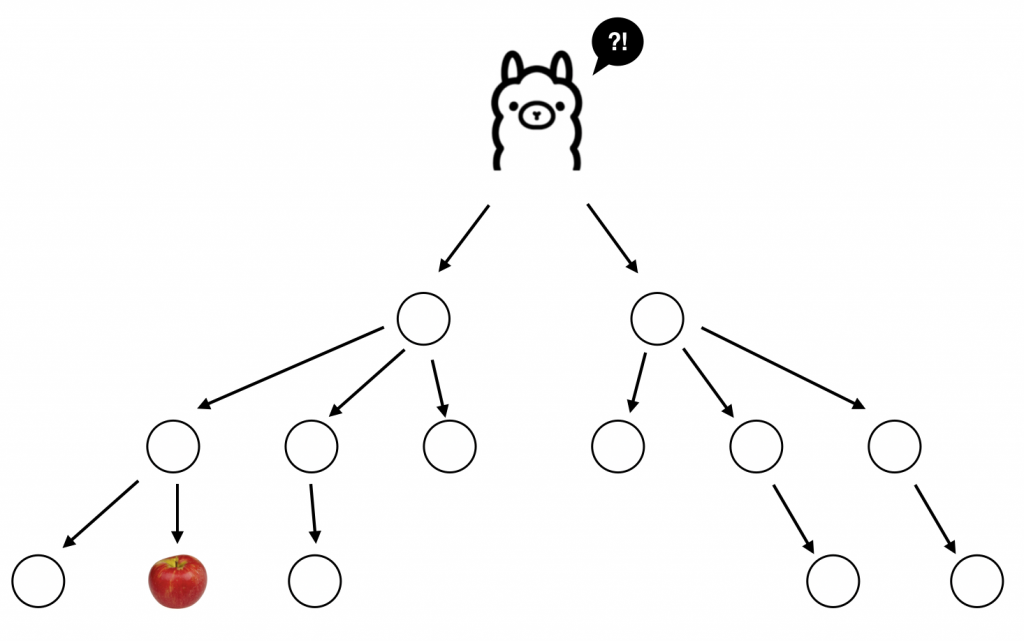

Effective reasoning requires combining multiple cognitive abilities: logical inference, knowledge retrieval, and combinatorial search. While Large Language Models (LLMs) show strong reasoning potential, they often rely on implicit recall rather than structured search strategies. Recent work has made significant progress in scaling inference-time compute to explore multiple reasoning paths. This project proposes integrating algorithmic search techniques with LLMs to improve performance on complex tasks such as mathematical reasoning and multi-hop inference. We aim to refine search strategies, inspired by systems like DeepSeek (Zhang et al., 2025), and apply them to challenging benchmarks such as ARC (AI2 Reasoning Challenge) and reasoning on knowledge graphs (KGs).

We will meet weekly to address questions, discuss progress, and explore ideas for future work.

Requirements

Strong programming skills (Python, etc.) and good knowledge of machine learning. Previous experience with PyTorch and other common deep-learning libraries is a plus. Previous experience with discrete mathematics and algorithms is also a plus.

Contact

Interested? Please reach out with a brief description of your motivation for the project, along with any relevant courses or prior projects (personal or academic) that demonstrate your background in the area.