The Forecaster’s Dilemma: Evaluating forecasts of extreme events

Accurate predictions of extreme events do not necessarily indicate the scientific superiority of the forecaster or the forecast method from which they originate. The way forecast evaluation is conducted in the media can thus pose a dilemma.

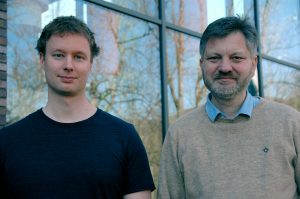

When it comes to extreme events, public discussion of forecasts often focuses on predictive performance. After the international financial crisis of 2007, for example, the public paid a great deal of attention to economists who had correctly predicted the crisis, attributing their success to superior predictive ability. However, restricting forecast evaluation to subsets of extreme observations has unexpected and undesired effects, and is bound to discredit even the most expert forecasts. In a recent article, statisticians Dr. Sebastian Lerch and Prof. Tilmann Gneiting (both affiliated with HITS and the Karlsruhe Institute of Technology), together with their coauthors from Norway and Italy, analyzed and explained this phenomenon and suggested potential remedies. The research team used theoretical arguments, simulation experiments and a real data study on economic variables. The article has just been published in the peer-reviewed journal Statistical Science. It is based on research funded by the Volkswagen Foundation.

Predicting calamities every time – a worthwhile strategy?

In the public arena, forecast evaluation is often conducted only after an extreme event has been observed, in particular if forecasters have failed to predict an event with high economic or societal impact. An example of what this can mean for forecasters is the devastating L’Aquila earthquake in 2009, which caused 309 deaths. In the aftermath, six Italian seismologists were put on trial for not predicting the earthquake. They were found guilty of involuntary manslaughter and sentenced to six years in prison, a verdict that stood until the Supreme Court in Rome acquitted them of the charges.

But how can scientists and outsiders, such as the media, evaluate the accuracy of forecasts predicting extreme events? At first sight, the practice of selecting extreme observations while discarding non-extreme ones and proceeding on the basis of standard evaluation tools seems quite logical. Intuitively, accurate predictions on the subset of extreme observations may suggest superior predictive abilities. But limiting the analyzed data to selected subsets can be problematic. “In a nutshell, if forecast evaluation is conditional on observing a catastrophic event, predicting a disaster every time becomes a worthwhile strategy,” says Sebastian Lerch, member of the “Computational Statistics” group at HITS. Given that media attention tends to focus on extreme events, expert forecasts are bound to fail in the public eye, and it becomes tempting to base decision making on misguided inferential procedures. “We refer to this critical issue as the ‘forecaster’s dilemma,’” adds Tilmann Gneiting.
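The effect described above can be reproduced in a few lines. The following is a minimal sketch, not the simulation from the paper: it assumes standard normal observations, a hypothetical threshold of 2 for what counts as "extreme", and two hypothetical point forecasters, a "sensible" one who always predicts 0 and an "alarmist" who always predicts 3. Both are scored by mean absolute error, first on all observations and then only on the extreme ones.

```python
import random
from statistics import fmean


def mean_abs_error(forecast, observations):
    """Mean absolute error of a constant point forecast."""
    return fmean(abs(y - forecast) for y in observations)


def run_dilemma_demo(n=100_000, threshold=2.0, seed=0):
    """Score two constant forecasters on all data and on extremes only.

    All settings (distribution, threshold, forecast values) are
    illustrative assumptions, not taken from the paper.
    """
    rng = random.Random(seed)
    observations = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # Keep only the observations that exceed the "extreme" threshold.
    extremes = [y for y in observations if y > threshold]

    forecasts = {"sensible": 0.0, "alarmist": 3.0}
    all_scores = {
        name: mean_abs_error(f, observations) for name, f in forecasts.items()
    }
    extreme_scores = {
        name: mean_abs_error(f, extremes) for name, f in forecasts.items()
    }
    return all_scores, extreme_scores


all_scores, extreme_scores = run_dilemma_demo()
print("MAE on all observations:    ", all_scores)
print("MAE on extreme observations:", extreme_scores)
```

On the full data set the sensible forecaster has the lower error, but once the evaluation is restricted to observations above the threshold, the alarmist comes out ahead, although the alarmist forecast carries no information at all.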

Avoiding the forecaster’s dilemma: Method is everything.

This predicament can be avoided if forecasts take the form of probability distributions, for which standard evaluation methods can be generalized to allow for specifically emphasizing extreme events. The paper uses economic forecasts of GDP growth and inflation rates in the United States between 1985 and 2011 to illustrate the forecaster’s dilemma and how these tools can be used to address it.
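One way to generalize a standard score so that it emphasizes extremes is to weight the continuous ranked probability score (CRPS) by a threshold, giving a score of the form ∫ w(z) (F(z) − 1{y ≤ z})² dz, where F is the forecast distribution function, y the observation, and w(z) = 1{z ≥ r} for a threshold r. The sketch below assumes this threshold-weighted form and, purely for illustration, Gaussian forecast distributions, a simple midpoint-rule integration, and hypothetical integration bounds; none of these choices is taken from the study's data.

```python
from statistics import NormalDist


def tw_crps(forecast, y, threshold, lo=-10.0, hi=10.0, steps=4000):
    """Threshold-weighted CRPS via midpoint-rule integration.

    forecast:  a statistics.NormalDist (assumed Gaussian forecast)
    y:         the realized observation
    threshold: only z >= threshold contributes (weight 1, else 0)
    lo, hi:    assumed integration bounds covering the relevant range
    """
    dz = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        z = lo + (i + 0.5) * dz
        if z < threshold:
            continue  # weight function is zero below the threshold
        indicator = 1.0 if y <= z else 0.0
        total += (forecast.cdf(z) - indicator) ** 2 * dz
    return total


sensible = NormalDist(mu=0.0, sigma=1.0)  # hypothetical forecaster
alarmist = NormalDist(mu=3.0, sigma=1.0)  # hypothetical forecaster

# An ordinary, non-extreme observation, scored with emphasis on z >= 2.
score_sensible = tw_crps(sensible, y=0.0, threshold=2.0)
score_alarmist = tw_crps(alarmist, y=0.0, threshold=2.0)
print(score_sensible, score_alarmist)
```

Because the score is computed for every observation rather than only for those that turned out extreme, the alarmist forecast is penalized for its false alarms: for the ordinary observation above, its score is clearly worse (larger) than that of the sensible forecast. Setting the threshold at or below the lower integration bound recovers the ordinary, unweighted CRPS.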

The results of the study are especially relevant for scientists seeking to evaluate the forecasts of their own methods and models, and for external third parties who need to choose between competing forecast providers, for example to produce hazard warnings or make financial decisions.

Although the research paper focused on an economic data set, the conclusions are important for many other applications: The forecast evaluation tools are currently being tested for use by national and international weather services.

Publication:

Lerch, S., Thorarinsdottir, T. L., Ravazzolo, F. and Gneiting, T. (2017). Forecaster’s dilemma: Extreme events and forecast evaluation. Statistical Science, in press.

DOI: 10.1214/16-STS588

Scientific Contact:

Prof. Dr. Tilmann Gneiting

Computational Statistics (CST) group

HITS Heidelberg Institute for Theoretical Studies

tilmann.gneiting@h-its.org

Schloss-Wolfsbrunnenweg 35

69118 Heidelberg

About HITS

HITS, the Heidelberg Institute for Theoretical Studies, was established in 2010 by physicist and SAP co-founder Klaus Tschira (1940-2015) and the Klaus Tschira Foundation as a private, non-profit research institute. HITS conducts basic research in the natural, mathematical, and computer sciences. Major research directions include complex simulations across scales, making sense of data, and enabling science via computational research. Application areas range from molecular biology to astrophysics. An essential characteristic of the Institute is interdisciplinarity, implemented in numerous cross-group and cross-disciplinary projects. The base funding of HITS is provided by the Klaus Tschira Foundation.